Docker:

Docker is a powerful platform that revolutionizes the way applications are developed, tested, and deployed. At its core, Docker is a containerization technology that provides a lightweight and portable environment for applications to run consistently across various environments. Here's a detailed explanation of Docker:

Containerization and Docker's Foundation:

Docker leverages containerization, a technology that encapsulates an application and its dependencies into a single unit known as a container. Unlike traditional virtualization, which involves running entire virtual machines, containers share the host OS's kernel, making them more lightweight and efficient. Docker containers include the application code, runtime, libraries, and system tools, ensuring that they can run consistently on any system that supports Docker.
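One way to see the shared kernel in practice is to compare the kernel release reported by the host with the one reported inside a container. The sketch below assumes a Linux host with Docker installed and uses the public alpine image purely as an illustration. The function name compare_kernels is hypothetical. On Docker Desktop for macOS or Windows, containers run inside a Linux virtual machine, so the two values would differ there.

```shell
# Hypothetical helper: print the kernel release seen by the host and by a container.
compare_kernels() {
    host_kernel=$(uname -r)
    # --rm removes the container as soon as the command exits.
    container_kernel=$(docker run --rm alpine uname -r)
    echo "host:      $host_kernel"
    echo "container: $container_kernel"
}
```

Calling compare_kernels on a Linux host should print the same release twice, because the container has no kernel of its own.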

Key Components of Docker:

Docker Engine:

- The core component of Docker that manages containers. It includes a server, REST API, and a command-line interface for interacting with Docker.

Docker Images:

- Images are the building blocks of Docker containers. They are lightweight, standalone, and executable packages that contain everything needed to run an application, including the code, runtime, libraries, and system tools.

Docker Containers:

- Containers are instances of Docker images. They provide an isolated environment for applications to run, ensuring consistency and portability.

Advantages of Docker:

Portability:

- Docker containers run consistently across different environments, eliminating the infamous "it works on my machine" problem. This portability simplifies the development and deployment process.

Efficiency:

- Containers share the host OS's kernel, making them more lightweight than traditional virtual machines. This efficiency allows for faster startup times and optimal resource utilization.

Isolation:

- Containers provide isolation for applications, ensuring that they do not interfere with each other. This isolation enhances security and allows for the deployment of multiple applications on a single host.

Scalability:

- Docker facilitates easy scaling of applications. Containers can be quickly started or stopped, and orchestration tools like Docker Swarm or Kubernetes can manage large-scale deployments seamlessly.

Versioning and Rollback:

- Docker images support versioning, enabling developers to roll back to previous versions if issues arise. This version control enhances the reliability of deployments.

Microservices Architecture:

- Docker aligns well with microservices architecture, allowing developers to break down monolithic applications into smaller, manageable components. Each microservice can run in its own container.
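The versioning and rollback point above can be sketched as a small shell helper that replaces a running container with one started from a different image tag. This is a minimal sketch, not a production rollout strategy. The function name redeploy, the container name web, and the image myapp with tags 1.0 and 1.1 are all hypothetical.

```shell
# Hypothetical helper: replace the container NAME with one started from IMAGE:TAG.
redeploy() {
    name=$1
    image=$2
    tag=$3
    # Remove the old container if it exists; -f stops it first if it is running.
    docker rm -f "$name" >/dev/null 2>&1 || true
    # Start a detached container from the requested version.
    docker run -d --name "$name" "$image:$tag"
}
```

Deploying a new version and rolling back then differ only in the tag: redeploy web myapp 1.1, followed by redeploy web myapp 1.0 if the new version misbehaves.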

Use Cases:

Application Development:

- Docker simplifies the development process by providing a consistent environment for developers, reducing the likelihood of deployment issues.

Continuous Integration/Continuous Deployment (CI/CD):

- Docker is integral to CI/CD pipelines, ensuring that applications are tested and deployed in a consistent environment.

Microservices:

- Docker facilitates the implementation of microservices architecture, allowing for the development and deployment of independent, modular components.

Hybrid and Multi-Cloud Deployments:

- Docker's portability makes it suitable for hybrid and multi-cloud environments, where applications need to run seamlessly across diverse infrastructures.

Docker Automation:

Docker automation refers to the process of streamlining and orchestrating various aspects of working with Docker containers through the use of automated tools, scripts, and workflows. Automation in the context of Docker is essential for managing the lifecycle of containers, deploying applications, ensuring consistency across different environments, and optimizing operational processes. It involves leveraging tools and practices to make containerized application development, deployment, and management more efficient, reliable, and scalable.

Key Aspects of Docker Automation:

Build Automation:

- Automation starts with building Docker images. Developers use Dockerfiles to define the configuration of their applications and dependencies. Automated build systems, such as continuous integration (CI) tools like Jenkins, GitLab CI, or GitHub Actions, can trigger image builds whenever changes are pushed to version control systems. This ensures that new code changes are automatically tested and packaged into Docker images.

Container Orchestration:

- Docker automation often involves orchestrating the deployment, scaling, and management of containers. Orchestration tools like Docker Swarm, Kubernetes, and Amazon ECS automate the distribution of containers across a cluster of machines, handle load balancing, manage resource allocation, and ensure high availability.

Configuration Management:

- Automating the configuration of Docker containers ensures consistency across different environments. Tools like Ansible, Puppet, or Chef can be used to automate the setup and configuration of Docker hosts and the deployment of Docker images.

Continuous Deployment:

- Docker automation is a key component of continuous deployment pipelines. Automated deployment scripts or CI/CD tools deploy Docker containers to various environments, including development, testing, staging, and production, ensuring a smooth and standardized deployment process.

Monitoring and Logging:

- Automation is crucial for monitoring the health and performance of Dockerized applications. Automated monitoring tools, such as Prometheus or Grafana, can be configured to collect and visualize metrics from Docker containers. Log aggregation tools like ELK (Elasticsearch, Logstash, Kibana) automate the collection and analysis of container logs.

Scaling:

- Docker automation allows for dynamic scaling of applications based on demand. Orchestration tools can automatically scale the number of container instances up or down, ensuring optimal resource utilization.

Security Scanning:

- Automated security scanning tools can be integrated into the Docker image build process to identify vulnerabilities in dependencies. This helps ensure that only secure and compliant containers are deployed.

Backup and Recovery:

- Automation plays a role in implementing backup and recovery strategies for Docker containers. Automated scripts or tools can be used to create regular backups of container data and configurations.
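As a rough illustration of the build automation step described above, a CI job might run a script like the following on every push. It is a sketch under assumptions: the function name ci_build_and_push and the repository registry.example.com/team/myapp are hypothetical, the script is assumed to run inside a Git checkout that contains a Dockerfile, and the CI runner is assumed to have already authenticated with docker login.

```shell
# Hypothetical CI step: build an image tagged with the current commit and push it.
ci_build_and_push() {
    repo=$1    # e.g. registry.example.com/team/myapp (hypothetical)
    # Use the short commit hash as the image tag.
    commit=$(git rev-parse --short HEAD)
    docker build -t "$repo:$commit" . || return 1
    docker push "$repo:$commit"
}
```

Tagging with the commit hash keeps each image traceable to the code that produced it, which also helps with rollbacks.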

Benefits of Docker Automation:

Consistency: Ensures that the development, testing, and production environments are consistent, reducing the likelihood of issues related to environment discrepancies.

Efficiency: Streamlines repetitive tasks, reduces manual intervention, and accelerates the deployment process.

Scalability: Facilitates the dynamic scaling of applications in response to varying workloads.

Reliability: Reduces the risk of human errors and ensures that deployments are carried out consistently.

Standardization: Enforces standardized practices across the container lifecycle.

Docker automation is an integral part of modern DevOps practices, where the goal is to automate the entire software development and delivery pipeline to achieve agility, speed, and reliability in the software development lifecycle.

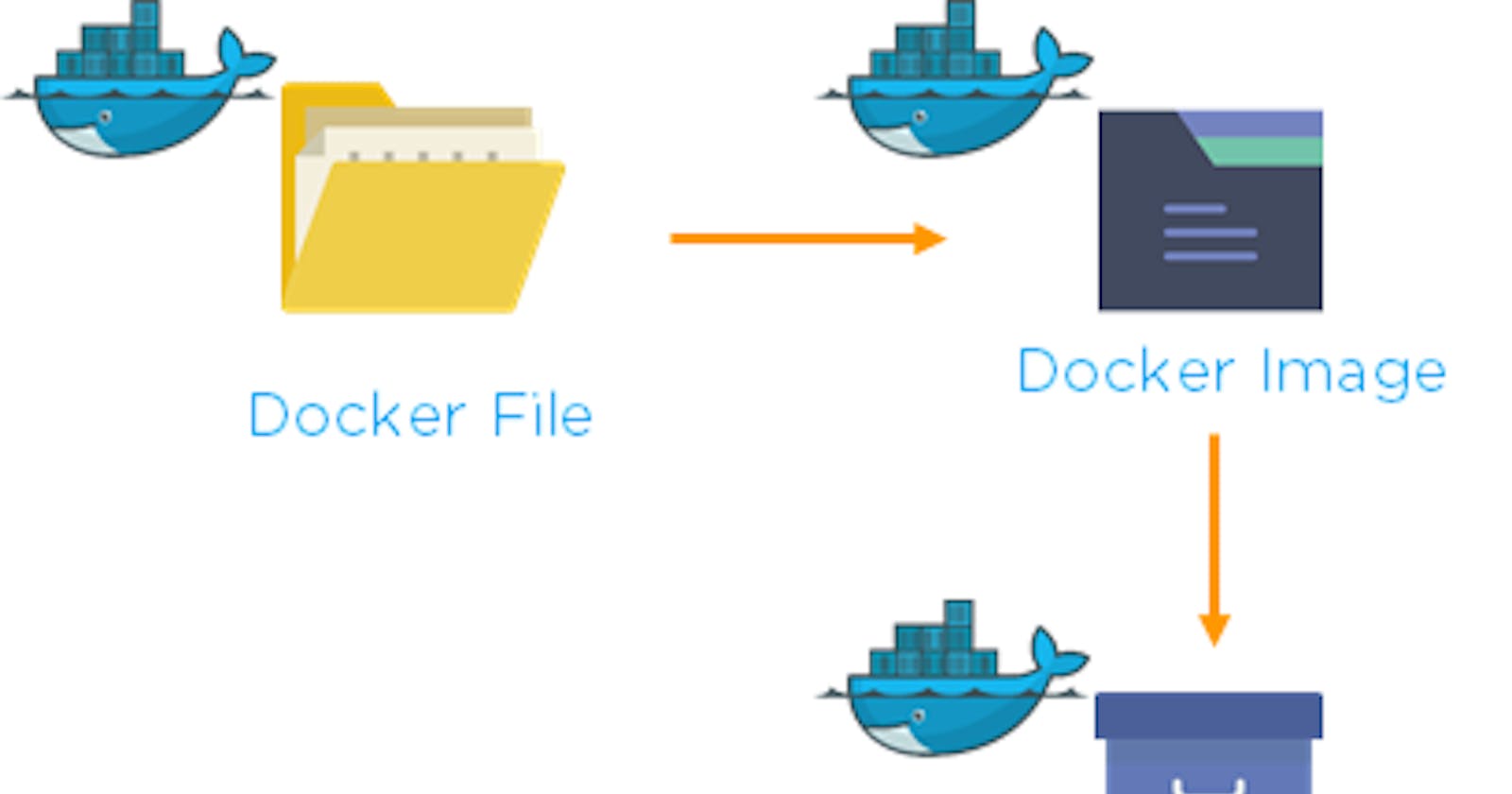

Dockerfile:

A Dockerfile is a text document that contains a set of instructions for building a Docker image. Docker uses these instructions to automate the process of creating a containerized application environment. The Dockerfile specifies the base image, adds application code, and configures the environment, enabling developers to define a reproducible and standardized setup.

Here are the key components and concepts related to Dockerfiles:

Components of a Dockerfile:

Base Image:

The Dockerfile typically starts with specifying a base image from which the application will be built. This base image serves as the foundation and includes the operating system and some pre-installed software. For example:

FROM ubuntu:latest

Instructions:

- Dockerfiles consist of a series of instructions that define how to set up the environment and configure the application. Common instructions include RUN for executing commands, COPY for copying files, and CMD for specifying the default command to run when the container starts.

Working Directory:

It's common to set a working directory within the container to organize files and execute commands in a specific path. For example:

WORKDIR /app

Copying Files:

The COPY instruction copies files from the build context on the host machine into the image. This is often used to include application code, configuration files, or other dependencies:

COPY . /app

Running Commands:

The RUN instruction is used to execute commands during the image-building process. This can include installing packages, setting up dependencies, or any other necessary tasks:

RUN apt-get update && apt-get install -y python3

Exposing Ports:

The EXPOSE instruction informs Docker that the container will listen on the specified network ports at runtime. It does not actually publish the ports:

EXPOSE 80

Defining Default Command:

The CMD instruction sets the default command to run when the container starts. It can include the command and any arguments. For example:

CMD ["python", "app.py"]

Example Dockerfile:

# Use an official Python runtime as a base image

FROM python:3.8-slim

# Set the working directory to /app

WORKDIR /app

# Copy the current directory contents into the container at /app

COPY . /app

# Install any needed packages specified in requirements.txt

RUN pip install --no-cache-dir -r requirements.txt

# Document that the app listens on port 80 (publish it with -p when running the container)

EXPOSE 80

# Define environment variable

ENV NAME=World

# Run app.py when the container launches

CMD ["python", "app.py"]

Building an Image from Dockerfile:

To build a Docker image using a Dockerfile, you run the following command in the same directory as your Dockerfile:

docker build -t image_name:tag .

This command builds an image with the specified name and tag based on the instructions in the Dockerfile. The . at the end indicates that the build context is the current directory.
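To connect the build command with the earlier note that EXPOSE does not publish ports, the sketch below builds the image and then starts a container with an explicit port mapping. The function name build_and_run, the image name myapp:1.0, and host port 8080 are illustrative assumptions. The container port 80 matches the example Dockerfile above.

```shell
# Hypothetical helper: build IMAGE from ./Dockerfile and run it with a published port.
build_and_run() {
    image=$1        # e.g. myapp:1.0
    host_port=$2    # port on the host, e.g. 8080
    docker build -t "$image" . || return 1
    # -d runs in the background; -p HOST:CONTAINER publishes container port 80.
    docker run -d -p "$host_port:80" "$image"
}
```

With build_and_run myapp:1.0 8080, the application would be reachable on port 8080 of the host, assuming it really listens on port 80 inside the container.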

Dockerfiles are crucial for creating reproducible and shareable environments, enabling developers to package their applications and dependencies into portable containers.

Docker Image:

A Docker image is a lightweight, standalone, and executable package that includes everything needed to run a piece of software, including the code, runtime, libraries, and system tools. Docker images serve as a portable and consistent unit that can be easily shared, distributed, and deployed across various environments. These images are the building blocks for containers, which are instances of running Docker images.

Here are key concepts related to Docker images:

Components of a Docker Image:

Base Image:

- The base image is the foundation of a Docker image. It includes the basic operating system and a minimal set of software packages. Images are often layered, and a base image serves as the starting point for additional layers that add application-specific code and configurations.

Layers:

- Docker images are composed of multiple layers. Each layer represents a set of file changes or instructions in the Dockerfile. Layers are cached, which allows for efficient image building and sharing of common layers across different images.

Dockerfile:

- The Dockerfile is a text document that contains a set of instructions for building a Docker image. It specifies the base image, adds application code, sets up the environment, and configures the containerized application.

Registry:

- Docker images can be stored in registries, which are repositories for sharing and distributing images. Docker Hub is a popular public registry, but organizations often set up private registries for internal use. Images can be pushed to and pulled from these registries.
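Layer caching is easiest to appreciate with a concrete ordering of instructions. The sketch below is a shell helper that writes a variant of the earlier Python example Dockerfile in which requirements.txt is copied and installed before the rest of the source, so that editing application code should not invalidate the cached dependency layer. The function name write_cache_friendly_dockerfile is hypothetical, and the file names follow the earlier example.

```shell
# Hypothetical helper: write a Dockerfile ordered so the dependency layer stays cached.
write_cache_friendly_dockerfile() {
    target=${1:-Dockerfile}
    cat > "$target" <<'EOF'
FROM python:3.8-slim
WORKDIR /app
# Copy only the dependency list first: this layer is rebuilt only when it changes.
COPY requirements.txt .
RUN pip install --no-cache-dir -r requirements.txt
# Copy the application code last: code edits invalidate only the layers from here on.
COPY . .
CMD ["python", "app.py"]
EOF
}
```

After building an image from the generated file, docker history image_name:tag lists the layers that make up the result.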

Key Operations with Docker Images:

Building Images:

- Docker images are built using the docker build command, which reads instructions from a Dockerfile and creates an image based on those instructions. The resulting image is tagged with a name and version.

docker build -t image_name:tag .

Pulling Images:

- Docker images can be pulled from a registry using the docker pull command. If an image is not available locally, Docker automatically fetches it from the specified registry.

docker pull image_name:tag

Pushing Images:

- After building an image, it can be pushed to a registry to make it available for others. The docker push command is used for this purpose.

docker push image_name:tag

Running Containers:

- Containers are instances of Docker images. They can be created and started using the docker run command, specifying the image name and any necessary runtime options.

docker run image_name:tag

Inspecting Images:

- The docker image inspect command provides detailed information about a Docker image, including its layers, configuration, and metadata.

docker image inspect image_name:tag

Image Tagging and Versioning:

Tagging: Docker images are often tagged with a version to indicate different releases or versions of the software. Common tags include version numbers such as 1.0, the default tag latest, or custom tags based on release names.

Versioning: Tagging and versioning ensure that images are version-controlled, making it clear which version of the software is included in a particular image.
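A brief sketch of how tagging and pushing fit together: an image built locally is given a registry-qualified name with docker tag and then pushed. The function name publish_image and the repository registry.example.com/team/myapp are hypothetical, and the sketch assumes docker login has already been run for that registry.

```shell
# Hypothetical helper: tag a local image for a registry and push it.
publish_image() {
    local_image=$1    # e.g. myapp:1.0
    remote_repo=$2    # e.g. registry.example.com/team/myapp (hypothetical)
    version=$3        # e.g. 1.0
    # docker tag adds a second name for the same image; no image data is copied.
    docker tag "$local_image" "$remote_repo:$version" || return 1
    docker push "$remote_repo:$version"
}
```

For example, publish_image myapp:1.0 registry.example.com/team/myapp 1.0 would make that version available to anyone who can pull from the registry.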

Docker images play a central role in the containerization process, providing a standardized and reproducible way to package and distribute software along with its dependencies. They enable developers to build, test, and deploy applications consistently across various environments.

Docker Container:

A Docker container is a runnable instance of a Docker image: an isolated process (or group of processes) with its own filesystem, network, and process space, started from an image that packages the code, runtime, libraries, and system tools. Images are built from the instructions in a Dockerfile, and containers are created from those images. Containers provide a consistent and isolated environment, so applications behave the same way in development, testing, and production.

Here are key concepts related to Docker containers:

Key Characteristics of Docker Containers:

Lightweight:

- Containers share the host operating system's kernel, making them more lightweight than traditional virtual machines. They require fewer resources and start quickly.

Isolation:

- Containers provide process and file system isolation, ensuring that the application inside a container does not interfere with the host system or other containers. Each container runs as an isolated process.

Portability:

- Containers encapsulate everything needed to run an application, making them highly portable. Once a container is built, it can run on any system that supports Docker, regardless of the underlying infrastructure.

Reproducibility:

- Docker containers are built from Docker images, which are versioned and can be shared and reproduced easily. This ensures consistent and reproducible deployments across different environments.

Scalability:

- Containers are designed to be scalable. They can be easily replicated to handle increased workloads or scaled down during periods of low demand. Orchestration tools like Docker Swarm or Kubernetes facilitate container scaling.

Flexibility:

- Containers are flexible and support a microservices architecture. Applications can be broken down into smaller, independent services, each running in its own container.

Key Operations with Docker Containers:

Creating Containers:

- Containers are created using the docker run command, specifying the Docker image to use. This command also allows the configuration of various options, such as environment variables, networking, and volume mounts.

docker run image_name:tag

Managing Containers:

- The docker ps command lists running containers, and docker ps -a lists all containers, including stopped ones. Containers can be stopped, started, and removed using commands like docker stop, docker start, and docker rm.

Interacting with Containers:

- The docker exec command allows for running commands inside a running container, facilitating interaction for debugging or administrative tasks.

docker exec -it container_id /bin/bash

Inspecting Containers:

- The docker inspect command provides detailed information about a container, including its configuration, network settings, and more.

docker inspect container_id

Logs and Monitoring:

- The docker logs command allows you to view the logs of a container, whether it is running or stopped. Docker also provides commands like docker stats for real-time monitoring of resource usage.

docker logs container_id

Copying Files:

- The docker cp command is used to copy files between the host system and a container, in either direction. It does not copy directly from one container to another.

docker cp file.txt container_id:/path/to/destination
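The operations above can be strung together into one lifecycle. The following sketch starts a detached container, runs a command in it, reads its logs, and cleans up. The function name container_lifecycle_demo, the container name demo, the APP_ENV variable, and the choice of the nginx image are assumptions made for illustration only.

```shell
# Hypothetical walkthrough of a container's lifecycle using the commands above.
container_lifecycle_demo() {
    name=${1:-demo}
    # Create and start a container in the background with an environment variable set.
    docker run -d --name "$name" -e APP_ENV=dev nginx || return 1
    docker ps --filter "name=$name"        # confirm it is running
    docker exec "$name" printenv APP_ENV   # run a command inside the container
    docker logs "$name"                    # show what the container wrote to stdout/stderr
    docker stop "$name"                    # stop the container
    docker rm "$name"                      # remove the stopped container
}
```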

Docker containers have become a fundamental unit for packaging and deploying applications. They provide a consistent and reproducible environment, simplify development workflows, and enhance scalability and resource efficiency in modern software development and deployment practices.

Tasks:

- Use the docker run command to start a new container and interact with it through the command line. [Hint: docker run hello-world]

docker run hello-world

- Use the docker inspect command to view detailed information about a container or image.

docker inspect <container_or_image_id>

- Use the docker port command to list the port mappings for a container.

docker port <container_id>

- Use the docker stats command to view resource usage statistics for one or more containers.

docker stats [container_name or container_id]

- Use the docker top command to view the processes running inside a container.

docker top <container_id>

- Use the docker save command to save an image to a tar archive.

docker save -o <output_file.tar> <image_name>

- Use the docker load command to load an image from a tar archive.

docker load -i <input_file.tar>
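The save and load tasks are two halves of moving an image between machines without a registry. A minimal sketch follows. The function names export_image and import_image and the archive name in the usage note are hypothetical examples.

```shell
# Hypothetical helper: write IMAGE to a tar archive on the source host.
export_image() {
    image=$1      # e.g. hello-world:latest
    archive=$2    # e.g. hello-world.tar
    docker save -o "$archive" "$image"
}

# Hypothetical helper: restore an image from a tar archive on the target host.
import_image() {
    archive=$1
    docker load -i "$archive"
}
```

For example, run export_image hello-world:latest hello-world.tar on one host, copy the archive across, then run import_image hello-world.tar on the other.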

We have now covered the Docker basics. We will start some Docker projects in the next blog post!